Unlocking the Power of High-Bandwidth Memory: How HBM Interface Design is Revolutionizing AI Accelerators in 2025 and Beyond. Explore the Technologies, Market Growth, and Strategic Opportunities Shaping the Next Era of AI Hardware.

- Executive Summary: Key Findings and Strategic Insights

- Market Overview: HBM Interface Design for AI Accelerators in 2025

- Technology Landscape: Evolution of HBM Standards and Architectures

- Competitive Analysis: Leading Players and Innovation Trends

- Market Size and Forecast (2025–2030): CAGR, Revenue Projections, and Regional Breakdown

- Drivers and Challenges: Performance Demands, Power Efficiency, and Integration Complexities

- Emerging Applications: AI, HPC, Data Centers, and Edge Computing

- Supply Chain and Ecosystem Analysis

- Future Outlook: Disruptive Technologies and Next-Gen HBM Interfaces

- Strategic Recommendations for Stakeholders

- Sources & References

Executive Summary: Key Findings and Strategic Insights

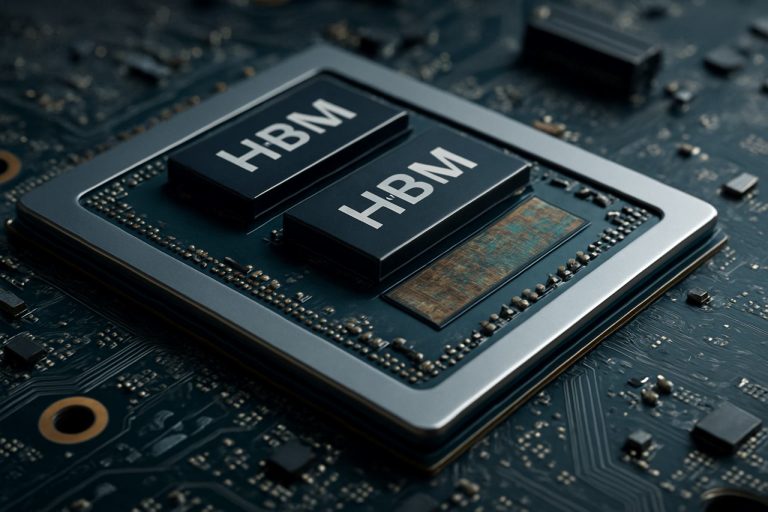

High-Bandwidth Memory (HBM) interface design has emerged as a critical enabler for next-generation AI accelerators, addressing the escalating demands for memory bandwidth, energy efficiency, and compact form factors in data-intensive applications. In 2025, the rapid evolution of AI workloads—particularly in deep learning and large language models—has intensified the need for memory solutions that can deliver terabytes per second of bandwidth while minimizing latency and power consumption. HBM, with its 3D-stacked architecture and through-silicon via (TSV) technology, has become the preferred memory interface for leading AI accelerator vendors.

Key findings indicate that the adoption of HBM3 and the anticipated rollout of HBM4 are setting new benchmarks in bandwidth, with HBM3 offering up to 819 GB/s per stack and HBM4 expected to surpass 1 TB/s. These advancements are enabling AI accelerators to process larger datasets in real time, significantly improving training and inference throughput. Major industry players such as Samsung Electronics Co., Ltd., Micron Technology, Inc., and SK hynix Inc. are at the forefront of HBM innovation, collaborating closely with AI hardware designers to optimize interface protocols and signal integrity.

Strategic insights reveal that successful HBM interface design hinges on several factors: advanced packaging techniques (such as 2.5D and 3D integration), robust thermal management, and the co-design of memory controllers with AI processing cores. Companies like Advanced Micro Devices, Inc. and NVIDIA Corporation are leveraging these strategies to deliver AI accelerators with unprecedented performance-per-watt metrics. Furthermore, the integration of HBM with chiplet-based architectures is gaining traction, offering modularity and scalability for future AI systems.

Looking ahead, the HBM interface ecosystem is expected to benefit from standardization efforts led by organizations such as the JEDEC Solid State Technology Association, which are streamlining interoperability and accelerating time-to-market for new memory solutions. As AI models continue to grow in complexity, the strategic alignment of HBM interface design with evolving AI workloads will be essential for maintaining competitive advantage in the AI hardware landscape.

Market Overview: HBM Interface Design for AI Accelerators in 2025

The market for High-Bandwidth Memory (HBM) interface design in AI accelerators is poised for significant growth in 2025, driven by the escalating computational demands of artificial intelligence and machine learning workloads. HBM, a 3D-stacked DRAM technology, offers substantial improvements in memory bandwidth and energy efficiency compared to traditional memory solutions, making it a critical enabler for next-generation AI accelerators.

In 2025, the adoption of HBM interfaces is being accelerated by leading semiconductor companies and hyperscale data center operators seeking to overcome the memory bottlenecks inherent in AI training and inference tasks. The latest generations raise per-stack bandwidth substantially: HBM3 delivers up to 819 GB/s per stack and the emerging HBM3E exceeds 1 TB/s per stack, so accelerators that carry several stacks reach multiple terabytes per second in aggregate, supporting the parallel processing requirements of large language models and generative AI systems. Companies like Samsung Electronics Co., Ltd., Micron Technology, Inc., and SK hynix Inc. are at the forefront of HBM development, supplying memory solutions that are being rapidly integrated into AI accelerator designs.

The interface design for HBM in AI accelerators is increasingly complex, requiring advanced packaging technologies such as 2.5D and 3D integration. These approaches enable the close physical proximity of HBM stacks to processing units, minimizing latency and maximizing data throughput. Semiconductor foundries like Taiwan Semiconductor Manufacturing Company Limited (TSMC) and Intel Corporation are investing in advanced interposer and packaging solutions to support these architectures.

Demand for HBM-enabled AI accelerators is particularly strong in cloud data centers, edge computing, and high-performance computing (HPC) sectors. Major AI chip vendors, including NVIDIA Corporation and Advanced Micro Devices, Inc. (AMD), are integrating HBM into their flagship products to deliver the memory bandwidth necessary for state-of-the-art AI models. The competitive landscape is further shaped by evolving standards from organizations such as JEDEC Solid State Technology Association, which continue to define new HBM specifications to meet future AI requirements.

Overall, the HBM interface design market for AI accelerators in 2025 is characterized by rapid innovation, strategic partnerships across the semiconductor supply chain, and a relentless focus on bandwidth, power efficiency, and scalability to support the next wave of AI advancements.

Technology Landscape: Evolution of HBM Standards and Architectures

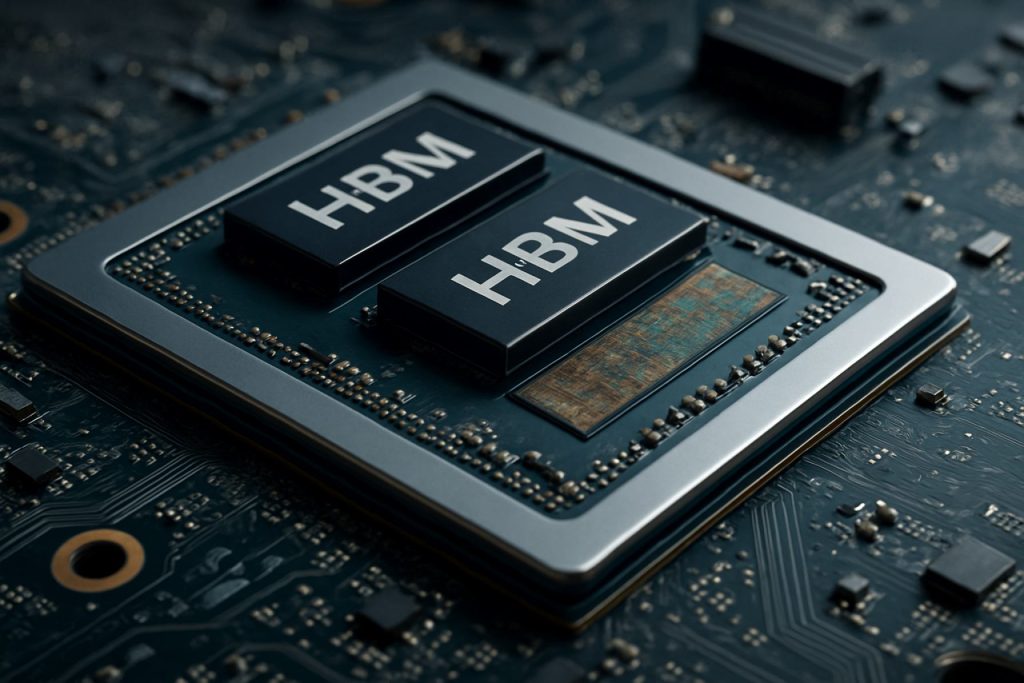

The technology landscape for High-Bandwidth Memory (HBM) interface design has rapidly evolved to meet the escalating demands of AI accelerators. Since its introduction, HBM has undergone several generational improvements, each iteration delivering higher bandwidth, increased capacity, and enhanced energy efficiency. The initial HBM standard, developed by SK hynix Inc. in collaboration with Advanced Micro Devices, Inc. (AMD) and adopted by JEDEC in 2013, set the stage for stacking DRAM dies vertically and connecting them with through-silicon vias (TSVs), enabling wide I/O and low power consumption. Samsung Electronics Co., Ltd. became a major supplier from the HBM2 generation onward.

HBM2 and HBM2E standards, ratified by JEDEC Solid State Technology Association, further increased per-stack bandwidth and capacity, with products supporting up to 3.6 Gbps per pin and 16 GB per stack. These improvements were critical for AI accelerators, which require rapid access to large datasets and models. The HBM3 standard, introduced in 2022, pushes per-pin data rates to 6.4 Gbps and product stack capacities up to 24 GB, with Micron Technology, Inc. and SK hynix Inc. among the first to announce HBM3 products. HBM3E, expected to be widely adopted in 2025, is projected to deliver even higher speeds and improved thermal management, addressing the needs of next-generation AI workloads.
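The per-pin rates above translate into per-stack bandwidth through the width of the data interface. The following is a minimal sketch, assuming a 1024-bit data interface per stack (an assumption not stated in the text, though it is the width commonly associated with HBM generations through HBM3); the function name is illustrative.

```python
def stack_bandwidth_gb_s(pin_rate_gbps: float, bus_width_bits: int = 1024) -> float:
    """Peak per-stack bandwidth in GB/s.

    pin_rate_gbps  : data rate per pin in Gb/s
    bus_width_bits : number of data pins per stack (assumed 1024 here)
    """
    # Gb/s per pin * number of pins = Gb/s per stack; divide by 8 for GB/s.
    return pin_rate_gbps * bus_width_bits / 8


# Per-pin rates quoted in the paragraph above.
for name, rate in [("HBM2E", 3.6), ("HBM3", 6.4)]:
    print(f"{name}: {stack_bandwidth_gb_s(rate):.1f} GB/s per stack")
```

Under that assumption, a 6.4 Gbps pin rate works out to 819.2 GB/s per stack, which matches the HBM3 figure quoted in the executive summary.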

Architecturally, the integration of HBM with AI accelerators has shifted from traditional side-by-side PCB placement to 2.5D and 3D packaging, such as silicon interposers and advanced chiplet designs. NVIDIA Corporation and AMD have pioneered the use of HBM in their GPUs and AI accelerators, leveraging these packaging techniques to minimize signal loss and maximize memory bandwidth. The adoption of co-packaged optics and on-die memory controllers is also emerging, further reducing latency and power consumption.

Looking ahead to 2025, the HBM interface design landscape is characterized by a focus on scalability, energy efficiency, and integration flexibility. Industry leaders are collaborating on new standards and reference architectures to ensure interoperability and to support the exponential growth in AI model size and complexity. As AI accelerators continue to push the boundaries of performance, the evolution of HBM standards and architectures remains a cornerstone of innovation in the field.

Competitive Analysis: Leading Players and Innovation Trends

The competitive landscape for High-Bandwidth Memory (HBM) interface design in AI accelerators is rapidly evolving, driven by the escalating demands of artificial intelligence workloads and the need for efficient, high-speed data transfer between memory and processing units. Key industry players such as Samsung Electronics Co., Ltd., Micron Technology, Inc., and SK hynix Inc. dominate the HBM manufacturing sector, each introducing successive generations of HBM (HBM2E, HBM3, and beyond) with increased bandwidth, capacity, and energy efficiency.

On the AI accelerator front, companies like NVIDIA Corporation and Advanced Micro Devices, Inc. (AMD) have integrated HBM into their flagship GPUs and data center accelerators, leveraging the memory’s wide interface and 3D stacking to minimize bottlenecks in deep learning and high-performance computing applications. Intel Corporation has also adopted HBM in its AI and HPC products, focusing on optimizing the interface for lower latency and higher throughput.

Innovation trends in HBM interface design are centered on maximizing bandwidth while reducing power consumption and physical footprint. Techniques such as advanced through-silicon via (TSV) architectures, improved signal integrity, and dynamic voltage/frequency scaling are being implemented to address these challenges. The adoption of HBM3 and the development of HBM4 standards, led by the JEDEC Solid State Technology Association, are pushing the boundaries of memory bandwidth, with HBM3 targeting speeds exceeding 800 GB/s per stack and HBM4 expected to surpass this significantly.

Another notable trend is the co-packaging of HBM with AI accelerators using advanced packaging technologies like 2.5D and 3D integration. Taiwan Semiconductor Manufacturing Company Limited (TSMC) and Amkor Technology, Inc. are at the forefront of providing these packaging solutions, enabling closer integration and improved thermal management. This co-design approach is critical for next-generation AI systems, where memory bandwidth and proximity to compute units directly impact performance and efficiency.

In summary, the competitive dynamics in HBM interface design for AI accelerators are shaped by rapid innovation, strategic partnerships between memory and chip manufacturers, and the relentless pursuit of higher bandwidth and lower power solutions to meet the needs of AI-driven workloads.

Market Size and Forecast (2025–2030): CAGR, Revenue Projections, and Regional Breakdown

The market for High-Bandwidth Memory (HBM) interface design in AI accelerators is poised for robust growth between 2025 and 2030, driven by escalating demand for high-performance computing in artificial intelligence, machine learning, and data center applications. The global HBM interface market is projected to register a compound annual growth rate (CAGR) of approximately 25–30% during this period, reflecting the rapid adoption of HBM-enabled AI accelerators in both enterprise and cloud environments.

Revenue projections indicate that the market size, valued at an estimated $2.5 billion in 2025, could surpass $7.5 billion by 2030. This surge is attributed to the increasing integration of HBM in next-generation AI chips, which require ultra-fast memory interfaces to handle massive parallel processing workloads. Key industry players such as Samsung Electronics Co., Ltd., Micron Technology, Inc., and SK hynix Inc. are investing heavily in R&D to advance HBM interface technologies, further fueling market expansion.
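The revenue figures and the growth rate quoted above can be cross-checked with the standard compound-growth formula. This is a small sketch of that arithmetic only; the function names are illustrative and the inputs are the report's own estimates, not independent data.

```python
def implied_cagr(start_value: float, end_value: float, years: int) -> float:
    """Compound annual growth rate implied by a start and end value."""
    return (end_value / start_value) ** (1 / years) - 1


def project(start_value: float, cagr: float, years: int) -> float:
    """Value after compounding `cagr` annually for `years` years."""
    return start_value * (1 + cagr) ** years


start_busd, end_busd, years = 2.5, 7.5, 5  # 2025 -> 2030, in billions of USD

print(f"Implied CAGR for $2.5B -> $7.5B: {implied_cagr(start_busd, end_busd, years):.1%}")
for rate in (0.25, 0.30):
    print(f"2030 value at {rate:.0%} CAGR: ${project(start_busd, rate, years):.2f}B")
```

Tripling over five years corresponds to a CAGR of roughly 24.6%, so the $7.5 billion figure sits at the low end of the 25–30% range; the 30% end of the range would imply about $9.3 billion by 2030.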

Regionally, Asia-Pacific is expected to dominate the HBM interface design market, accounting for over 45% of global revenue by 2030. This dominance is underpinned by the presence of major semiconductor foundries and memory manufacturers in countries such as South Korea, Taiwan, and China. North America follows closely, driven by the concentration of AI accelerator developers and hyperscale data centers in the United States and Canada. Europe is also witnessing steady growth, particularly in automotive AI and industrial automation sectors.

The proliferation of AI-driven applications in edge computing, autonomous vehicles, and high-frequency trading is further accelerating the adoption of advanced HBM interfaces. As AI models become more complex and data-intensive, the need for higher memory bandwidth and lower latency is pushing chip designers to adopt HBM2E, HBM3, and emerging HBM4 standards, as defined by the JEDEC Solid State Technology Association.

In summary, the HBM interface design market for AI accelerators is set for significant expansion through 2030, with strong regional growth in Asia-Pacific and North America, and a clear trend toward higher bandwidth and more energy-efficient memory solutions.

Drivers and Challenges: Performance Demands, Power Efficiency, and Integration Complexities

The rapid evolution of artificial intelligence (AI) workloads has placed unprecedented demands on memory subsystems, making High-Bandwidth Memory (HBM) a critical enabler for next-generation AI accelerators. The design of HBM interfaces must address several key drivers and challenges, particularly in the areas of performance, power efficiency, and integration complexity.

Performance Demands: AI accelerators require massive memory bandwidth to feed data-hungry compute engines, especially for deep learning models with billions of parameters. HBM, with its 3D-stacked architecture and wide I/O, offers bandwidths exceeding 1 TB/s in the latest generations. However, achieving this in practice requires careful interface design to minimize latency, maximize throughput, and ensure signal integrity at high data rates. The interface must also support efficient data movement patterns typical of AI workloads, such as large matrix multiplications and tensor operations, which place additional stress on memory controllers and interconnects.
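To make the bandwidth pressure concrete, a rough lower bound on the time needed to read every model weight once from HBM can be estimated as total bytes divided by aggregate bandwidth. The sketch below is a back-of-the-envelope illustration only: the model size, the 2-byte weight precision, and the stack count are hypothetical inputs, and the estimate ignores caching, overlap with compute, and achievable (versus peak) bandwidth.

```python
def min_weight_stream_time_ms(
    num_params: float,
    bytes_per_param: float,
    stacks: int,
    per_stack_gb_s: float,
) -> float:
    """Lower bound (ms) on the time to read all weights once at peak bandwidth."""
    total_bytes = num_params * bytes_per_param
    aggregate_bytes_per_s = stacks * per_stack_gb_s * 1e9
    return total_bytes / aggregate_bytes_per_s * 1e3


# Hypothetical configuration: 70e9 parameters at 2 bytes each,
# six stacks at the 819.2 GB/s HBM3 peak figure.
t_ms = min_weight_stream_time_ms(70e9, 2, stacks=6, per_stack_gb_s=819.2)
print(f"Minimum time per full weight pass: {t_ms:.1f} ms")
```

Because this bound scales inversely with aggregate bandwidth, adding stacks or raising the per-pin rate shortens a memory-bound pass directly, which is why interface design figures so heavily in accelerator performance.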

Power Efficiency: As AI accelerators scale, power consumption becomes a critical constraint, both for data center deployments and edge applications. HBM’s proximity to the processor and its use of through-silicon vias (TSVs) reduce energy per bit compared to traditional DDR memory. Nonetheless, the interface design must further optimize power by employing advanced signaling techniques, dynamic voltage and frequency scaling, and intelligent power management. Balancing high bandwidth with low power operation is a persistent challenge, especially as memory stacks grow in capacity and speed. Organizations like Samsung Electronics Co., Ltd. and Micron Technology, Inc. are actively developing new HBM generations with improved energy efficiency.
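The link between energy per bit and interface power is simple arithmetic: power equals bits transferred per second multiplied by energy per bit. The sketch below illustrates the relationship; the 4 pJ/bit value is a placeholder chosen for illustration, not a measured or vendor-specified figure.

```python
def interface_power_watts(bandwidth_gb_s: float, energy_pj_per_bit: float) -> float:
    """Power drawn by data transfer at a sustained bandwidth.

    bandwidth_gb_s    : sustained bandwidth in GB/s
    energy_pj_per_bit : transfer energy in picojoules per bit (assumed input)
    """
    bits_per_second = bandwidth_gb_s * 1e9 * 8
    return bits_per_second * energy_pj_per_bit * 1e-12  # pJ -> J


# Placeholder energy figure; substitute a value from a datasheet or measurement.
power = interface_power_watts(bandwidth_gb_s=819.2, energy_pj_per_bit=4.0)
print(f"Transfer power at 819.2 GB/s and 4 pJ/bit: {power:.1f} W")
```

Since power grows linearly with bandwidth at a fixed energy per bit, each bandwidth increase must be matched by a reduction in pJ/bit to hold the memory power budget constant.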

Integration Complexities: Integrating HBM with AI accelerators involves significant packaging and system-level challenges. The physical stacking of memory dies and their connection to the processor via silicon interposers or advanced substrates require precise manufacturing and thermal management. Signal integrity, electromagnetic interference, and mechanical stress must all be addressed to ensure reliable operation. Furthermore, the interface must be compatible with evolving standards, such as those defined by JEDEC Solid State Technology Association, to ensure interoperability and future scalability. The complexity increases as accelerators adopt chiplet-based architectures, necessitating robust HBM interface solutions that can support heterogeneous integration.

In summary, the design of HBM interfaces for AI accelerators in 2025 is shaped by the need to deliver extreme bandwidth, maintain power efficiency, and manage the intricacies of advanced integration, all while keeping pace with the rapid innovation in AI hardware.

Emerging Applications: AI, HPC, Data Centers, and Edge Computing

The rapid evolution of artificial intelligence (AI), high-performance computing (HPC), data centers, and edge computing is driving unprecedented demand for memory bandwidth and efficiency. High-Bandwidth Memory (HBM) interface design has become a cornerstone technology for AI accelerators, enabling the massive parallelism and data throughput required by modern deep learning and analytics workloads. HBM achieves this by stacking multiple DRAM dies vertically and connecting them with through-silicon vias (TSVs), resulting in significantly higher bandwidth and lower power consumption compared to traditional memory interfaces.

In AI accelerators, such as those developed by NVIDIA Corporation and Advanced Micro Devices, Inc. (AMD), HBM interfaces are critical for feeding data to thousands of processing cores without bottlenecks. The latest HBM3 and HBM3E generations, building on standards defined by JEDEC Solid State Technology Association, support roughly 819 GB/s and more than 1 TB/s per stack, respectively, which is essential for training large-scale neural networks and real-time inference in data centers. These interfaces are tightly integrated with the accelerator die using advanced packaging techniques such as 2.5D and 3D integration, minimizing signal loss and latency.

In HPC environments, HBM’s high bandwidth and energy efficiency are leveraged to accelerate scientific simulations, financial modeling, and other data-intensive tasks. Supercomputers like those built by Cray Inc. and Fujitsu Limited utilize HBM-enabled processors to achieve petascale and exascale performance targets. The interface design must address challenges such as signal integrity, thermal management, and error correction to ensure reliable operation under extreme workloads.

Edge computing devices, which require compact form factors and low power consumption, are also beginning to adopt HBM interfaces. Companies like Samsung Electronics Co., Ltd. and SK hynix Inc. are developing HBM solutions tailored for edge AI chips, balancing bandwidth needs with stringent power and thermal constraints.

Looking ahead to 2025, the continued refinement of HBM interface design will be pivotal for supporting the next generation of AI, HPC, and edge applications. Innovations in packaging, signaling, and memory controller architectures will further enhance the scalability and efficiency of AI accelerators, ensuring that memory bandwidth keeps pace with the exponential growth in computational demand.

Supply Chain and Ecosystem Analysis

The supply chain and ecosystem for High-Bandwidth Memory (HBM) interface design in AI accelerators is characterized by a complex network of semiconductor manufacturers, memory suppliers, foundries, and design tool providers. HBM, with its vertically stacked DRAM dies and wide interface, is a critical enabler for AI accelerators, offering the bandwidth and energy efficiency required for large-scale machine learning workloads. The design and integration of HBM interfaces demand close collaboration between memory vendors, such as Samsung Electronics Co., Ltd., Micron Technology, Inc., and SK hynix Inc., and leading AI chip designers like NVIDIA Corporation and Advanced Micro Devices, Inc. (AMD).

The ecosystem is further supported by advanced packaging and interconnect technologies, such as silicon interposers and 2.5D/3D integration, provided by foundries like Taiwan Semiconductor Manufacturing Company Limited (TSMC) and Intel Corporation. These foundries enable the physical integration of HBM stacks with logic dies, ensuring signal integrity and thermal management at high data rates. EDA tool providers, including Synopsys, Inc. and Cadence Design Systems, Inc., offer specialized IP and verification solutions to address the stringent timing, power, and reliability requirements of HBM interfaces.

Standardization efforts, led by organizations such as JEDEC Solid State Technology Association, play a pivotal role in defining HBM interface specifications and ensuring interoperability across the supply chain. The rapid evolution of HBM standards (e.g., HBM3, HBM3E) requires ecosystem participants to continuously update their design flows and manufacturing processes. Additionally, the growing demand for AI accelerators in data centers and edge devices is driving investments in capacity expansion and supply chain resilience, as seen in recent announcements from major memory and foundry partners.

In summary, the HBM interface design ecosystem for AI accelerators in 2025 is marked by deep interdependencies among memory suppliers, chip designers, foundries, EDA vendors, and standards bodies. This collaborative environment is essential for delivering the high-performance, energy-efficient memory subsystems that underpin next-generation AI workloads.

Future Outlook: Disruptive Technologies and Next-Gen HBM Interfaces

The future of High-Bandwidth Memory (HBM) interface design for AI accelerators is poised for significant transformation, driven by disruptive technologies and the evolution of next-generation HBM standards. As AI workloads continue to demand higher memory bandwidth and lower latency, the industry is moving beyond HBM2E and HBM3 towards even more advanced solutions such as HBM3E and early research into HBM4. These new standards promise to deliver unprecedented data rates, with HBM3E targeting speeds up to 9.2 Gbps per pin and total bandwidth of roughly 1.2 TB/s per stack, a critical leap for AI training and inference at scale (Samsung Electronics).

Disruptive interface technologies are also emerging to address the challenges of signal integrity, power delivery, and thermal management inherent in stacking more memory dies and increasing I/O density. Innovations such as advanced through-silicon via (TSV) architectures, improved interposer materials, and the adoption of chiplet-based designs are enabling tighter integration between AI accelerators and HBM stacks. For example, the use of silicon bridges and organic interposers is being explored to reduce cost and improve scalability, while maintaining the high-speed signaling required by next-gen HBM (Advanced Micro Devices, Inc.).

Looking ahead, the integration of HBM with emerging AI accelerator architectures—such as those leveraging 2.5D and 3D packaging—will further blur the lines between memory and compute. This co-packaging approach is expected to minimize data movement, reduce energy consumption, and unlock new levels of parallelism for large language models and generative AI workloads. Industry leaders are also collaborating on new interface protocols and error correction schemes to ensure reliability and scalability as memory bandwidths soar (Micron Technology, Inc.).

In summary, the future of HBM interface design for AI accelerators will be shaped by rapid advances in memory technology, packaging innovation, and system-level co-design. These developments are set to redefine the performance envelope for AI hardware in 2025 and beyond, enabling the next wave of breakthroughs in machine learning and data analytics.

Strategic Recommendations for Stakeholders

As AI accelerators increasingly rely on high-bandwidth memory (HBM) to meet the demands of large-scale machine learning and deep learning workloads, stakeholders—including chip designers, system integrators, and data center operators—must adopt forward-looking strategies to optimize HBM interface design. The following recommendations are tailored to address the evolving landscape of HBM integration in AI hardware for 2025 and beyond.

- Prioritize Co-Design of Memory and Compute: Collaborative development between memory and compute teams is essential. By co-optimizing the HBM interface with the AI accelerator’s architecture, stakeholders can minimize latency and maximize throughput. Companies like Samsung Electronics Co., Ltd. and Micron Technology, Inc. have demonstrated the benefits of such integrated approaches in their latest HBM solutions.

- Adopt Latest HBM Standards: Staying current with the latest HBM standards, such as HBM3 and emerging HBM4, ensures compatibility and access to higher bandwidths and improved power efficiency. The JEDEC Solid State Technology Association regularly updates these standards, and early adoption can provide a competitive edge.

- Invest in Advanced Packaging Technologies: 2.5D and 3D integration, such as silicon interposers and through-silicon vias (TSVs), are critical for efficient HBM interface design. Collaborating with packaging specialists like Taiwan Semiconductor Manufacturing Company Limited (TSMC) can help stakeholders leverage cutting-edge interconnect solutions.

- Optimize Power Delivery and Thermal Management: As HBM stacks grow in density and speed, power delivery and heat dissipation become more challenging. Stakeholders should invest in advanced power management ICs and innovative cooling solutions, working with partners such as CoolIT Systems Inc. for thermal management.

- Foster Ecosystem Collaboration: Engaging with industry consortia and standards bodies, such as OIF (Optical Internetworking Forum), can help stakeholders stay informed about interface innovations and interoperability requirements.

By implementing these strategic recommendations, stakeholders can ensure that their HBM interface designs for AI accelerators remain robust, scalable, and future-proof, supporting the next generation of AI workloads.

Sources & References

- Micron Technology, Inc.

- NVIDIA Corporation

- JEDEC Solid State Technology Association

- Amkor Technology, Inc.

- Cray Inc.

- Fujitsu Limited

- Synopsys, Inc.

- OIF (Optical Internetworking Forum)